If you're as huge fan of reddit as I am, then you'll have noticed that many titles have "(Pic)", "[Picture]", or "(Video)" after them. It means that the content the link points to has a picture or a video in it. Sometimes I just want to quickly browse through all the pics or vids but unfortunately reddit's search is broken and there is really no good way to see the best pics and videos on reddit.

I decided to solve this problem and create Reddit Media website, which monitors reddit's front page, collects picture and video links, and builds an archive of them over time. In fact I just launched it at redditmedia.com.

I developer this website very quickly and dirty by just throwing code together. I didn't worrying about code quality or what others will think about the code. I like to get things done rather than making my code perfect. You can't beat running code.

I will release full source code of website with all the programs that generating the site. Also I will blog how the tools work and what ideas I used.

Update: Done! The site is up at reddit media: intelligent fun online.

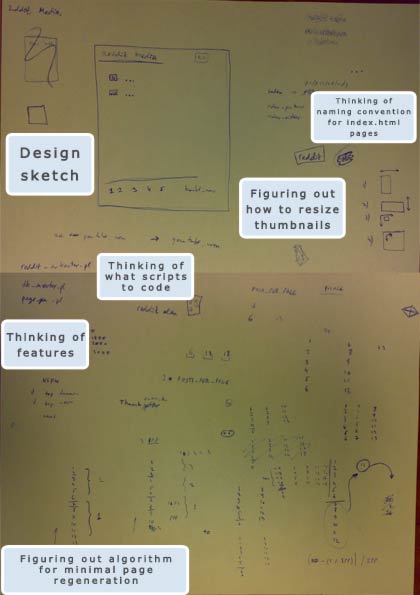

Reddit Media Website's Technical Design Sketch

I use DreamHost shared hosting to run this website. Overall it is great hosting company and I have been with them for more than a year now. The best part is that you get shell access. Unfortunately a downsite is that as it's shared hosting, sometimes the server gets overloaded and serving of dynamic pages can become slow.

I want the new website to be as fast as possible even when the server is loaded. I do not want any dynamic parsing to be involved when loading the website. Because of this I will go with generating static HTML pages for the content.

A Perl script will run every 30 mins from crontab, get reddit.com website, extract titles and URLs. Another script will then add the titles to the lightweight sqlite on-disk database in case I ever want to make the website dynamic. And the third script will use the entries in the database and generate HTML pages.

Technical Design

An experienced user might ask if this design does not have a race-condition – what happens at the moment when the new static page is generated and user loads the same page. Isn't there a race condition? The answer is no. I've thought about it and the way static pages will be updates is they will first be written to temporary files, then moved in place of the existing ones. The website runs on Linux operating system and by looking up `man 2 rename' you'll find that:

If newpath already exists it will be atomically replaced (subject to a few conditions - see ERRORS below), so that there is no point at which another process attempting to access(2,5) newpath will find it missing.

TLDR: rename system call is atomic, which means we have no trouble with race conditions.

Reddit provides RSS feed to the front page news. It has 25 latest news and maybe 5 of them are media links. That is not enough links to launch the website. People visiting the site will get bored with just 5 links and only 10 new added daily. I need more content to launch the site. I could wait and launch the site later when articles have piled up but I do not want to wait and I want to launch it ASAP!

Here's what I'm gonna do. First, I'll create a script that will go through all the pages on reddit looking for picture and video links, and insert the found items in the database. It will match patterns in link titles and domains which contain only media (such as flickr or xkcd).

Here is the list of patterns I came up with that describe pictures and videos:

- picture

- pic

- image

- photo

- comic

- chart

- video

- vid

- clip

- film

- movie

And here are the domains that exclusively contain media:

- youtube.com

- video.google.com

- liveleak.com

- break.com

- metacafe.com

- brightcove.com

- dailymotion.com

- flicklife.com

- flurl.com

- gofish.com

- ifilm.com

- livevideo.com

- video.yahoo.com

- photobucket.com

- flickr.com

- xkcd.com

To write this script I'll use LWP::UserAgent to get HTML contents and HTML::TreeBuilder to extract titles and links.

This script will output the found items in a human readable format, ready for input to another script, which will absorb this information and put it in the SQLite database. A true Unix hacker's approach!

This script is called reddit_extractor.pl. It takes one optional argument which is number of reddit pages to extract links from. If no argument is specified, it goes through all reddit pages until it hits the last one. For example, specifying 1 as the first argument makes it parse just the front page. I can now run this script periodically to find links on the front page. No need for additionally parsing RSS.

There is one constant in this script which can be changed. This constant, VOTE_THRESHOLD, sets the threshold of how many votes a post should have received to be collected by the program. I had to add it because when digging (heh) through older reddit's posts there are a lot of links with 1 or 2 votes, which means it really wasn't that good.

The script outputs each media post matching a pattern or domain in the following format:

title (type, user, reddit id, url)

- title is the title of the article

- type is the media type. It can be one of 'video', 'videos', 'picture', 'pictures'. It's plural if the title contains "pics" or "videos" (plural) form of media.

- user is the reddit user who posted the link

- reddit id is the unique identifier reddit uses to identify its links

- url is the url to the media

Script 'reddit_extractor.pl' can be downloaded here:

catonmat.net/ftp/reddit_extractor.perl

Then I will create a script that takes this input and puts it into SQLite database. It is so trivial that there is nothing much to write about it.

This script will also be written in Perl programming langauge and will use just DBI and DBD::SQLite modules for accessing the SQLite database.

The script will create an empty database on the first invocation, read the data from stdin and insert the data in the database.

The database design is dead simple. It contains just two tables:

- reddit which stores the links found on reddit, and

- reddit_status which contains some info about how the page generator script used the reddit table

Going into more details, reddit table contains the following colums:

- id - the primary key of the table

- title - title of the media link found on reddit

- url - url to the media

- reddit_id - id reddit uses to identify it's posts (used by my scripts to link to comments)

- user - username of the person who posted the link on reddit

- type - type of the media, can be: 'video', 'videos', 'picture', 'pictures'. It's plural if the title contains "pics" or "videos" (plural) form of media.

- date_added - the date the entry was added to the database

The other table, reddit_status contains just two colums:

- last_id - the last id in the reddit table which the generator script used for generating the site

- last_run - date the of last successful run of the generator script

This script is called 'db_inserter.pl'. It does not take any arguments but has one constant which has to be changed before using. This constant, DATABASE_PATH, defined the path to SQLite database. As I mentioned, it is allowed for the database not to exist, this script will create one on the first invocation.

These two scripts used together can now be periodically run from crontab to monitor the reddit's front page and insert the links in the database. It can be done with as simple command as:

reddit_extractor.pl 1 | db_inserter.pl

Script 'db_inserter.pl' can be downloaded here:

catonmat.net/ftp/db_inserter.perl

Now that we have our data, we just need to display it in a nice way. That's the job of generator script.

The generator script will be run after the previous two scripts have been run together and it will use information in the database to build static HTML pages.

Since generating static pages is computationally expensive, the generator has to be smart enough to minimize regeneration of already generated pages. I commented the algorithm (pretty simple algorithm) that minimizes regeneration script carefully, you can take a look at 'generate_pages' function in the source.

The script generates three kinds of pages at the moment - pages containing all pictures and videos, pages containing just pictures and pages containing just videos.

There is a lot of media featured on reddit and as the script keeps things cached, the directory sizes can grow pretty quickly. If a file system which performs badly with thousands of files in a single directory is used, the runtime of the script can degrade. To avoid this, the generator stores cached reddit posts in subdirectories based on the first char of their file name. For example, if a filename of a cached file is 'foo.bar', then it stores the file in /f/foo.bar directory.

The other thing this script does is locate thumbnail images for media. For example, for YouTube videos, it would construct URL to their static thumbnails. For Google Video I could not find a public service for easily getting the thumbnail. The only way I found to get a thumbnail of Google Video is to get the contents of the actual video page and extract it from there. The same applies to many other video sites which do not tell developers how to get the thumbnail of the video. Because of this I had to write a Perl module 'ThumbExtractor.pm', which given a link to a video or picture, extracts the thumbnail.

'ThumbExtractor.pm' module can be viewed here:

<a href="https://catonmat.net/ftp/ThumbExtractor.pm" title="thumbnail extractor (perl module, reddit media generator)>catonmat.net/ftp/ThumbExtractor.pm

Some of the links on reddit contain the link to actual image. I wouldn't want the reddit media site to take long to load, that's why I set out to seek a solution for caching small thumbnails on the server the website is generated.

I had to write another module 'ThumbMaker.pm' which goes and downloads the image, makes a thumbnail image of it and saves to a known path accessible from web server.

'ThumbMaker.pm' module can be viewed here:

thumbnail maker (perl module, reddit media generator)

To manipulate the images (create thumbnails), the ThumbMaker package uses Netpbm open source software.

Netpbm is a toolkit for manipulation of graphic images, including conversion of images between a variety of different formats. There are over 300 separate tools in the package including converters for about 100 graphics formats. Examples of the sort of image manipulation we're talking about are: Shrinking an image by 10%; Cutting the top half off of an image; Making a mirror image; Creating a sequence of images that fade from one image to another.

You will need this software (either compile yourself, or get the precompiled packages) if you want to run the the reddit media website generator scripts.

To use the most common image operations easily, I wrote a package 'Netpbm.pl', which provides operations like resize, cut, add border and others.

'Netpbm.pm' package can be viewed here:

netpbm image manipulation (perl module, reddit media generator)

I hit an interesting problem while developing the ThumbExtractor.pm and ThumbMaker.pm packages - what should they do if the link is to a regular website with just images? There is no simple way to download the right image which the website wanted to show to users. I thought for a moment and came up with an interesting but simple algorithm which finds "the best" image on the site. It retrieve ALL the images from the site and find the one with biggest dimensions and make a thumbnail out of it. It is pretty obvious, pictures posted on reddit are big and nice, so the biggest picture on the site must be the one that was meant to be shown. A more advanced algorithm would analyze it's location on the page and add weigh to the score of how good the image is, depending on where it is located. The more in the center of the screen, the higher score.

For this reason I developed yet another Perl module called 'ImageFinder.pm'. See the 'find_best_image' subroutine to see how it works.

'ImageFinder.pm' module can be viewed here:

best image finder (perl module, reddit media generator)

The generator script also uses CPAN's Template::Toolkit package for generating HTML pages from templates.

The name of the generator script is 'page_gen.pl'. It takes one optional argument 'regenerate' which if specified clears the cache and regenerates all the pages anew. It is useful when templates are updated or changes are made to thumbnail generator.

Program 'page_gen.pl' can be viewed here:

reddit media page generator (perl script)

While developing software I like to take a lot of notes and solve problems that come up on paper. For example, while working on this site, I had to solve a problem how to regenerate existing pages minimally and how to resize thumbnails so they looked nice.

Here is how many tiny notes I tool while working on this site:

(sorry for the quality again, i took the picture with camera phone with two shots and stitched it together with image editor)

(sorry for the quality again, i took the picture with camera phone with two shots and stitched it together with image editor)

The final website is at redditmedia.com address (now moved to http://reddit.picurls.com). Click http://reddit.picurls.com to visit it!

Here are all the scripts together with documentation.

Download Reddit Media Website Generator Scripts

All scripts in a single .zip file

Download: reddit.media.website.generator.zip

Individual scripts

reddit_extractor.pl

Download link: catonmat.net/ftp/reddit_extractor.perl

db_inserter.pl

Download link: catonmat.net/ftp/db_inserter.perl

page_gen.pl

Download link: catonmat.net/ftp/page_gen.perl

ThumbExtractor.pm

Download link: catonmat.net/ftp/ThumbExtractor.pm

ThumbMaker.pm

Download link: catonmat.net/ftp/ThumbMaker.pm

ImageFinder.pm

Download link: catonmat.net/ftp/ImageFinder.pm

NetPbm.pm

Download link: catonmat.net/ftp/NetPbm.pm

What is reddit?

Reddit is a social news website where users decide its contents.

From their faq:

A source for what's new and popular on the web -- personalized for you. We want to democratize the traditional model by giving editorial control to the people who use the site, not those who run it. Your votes train a filter, so let reddit know what you liked and disliked, because you'll begin to be recommended links filtered to your tastes. All of the content on reddit is from users who are rewarded for good submissions (and punished for bad ones) by their peers; you decide what appears on your front page and which submissions rise to fame or fall into obscurity.

Have fun with Reddit Media and see you next time!